Goal and motivation of project

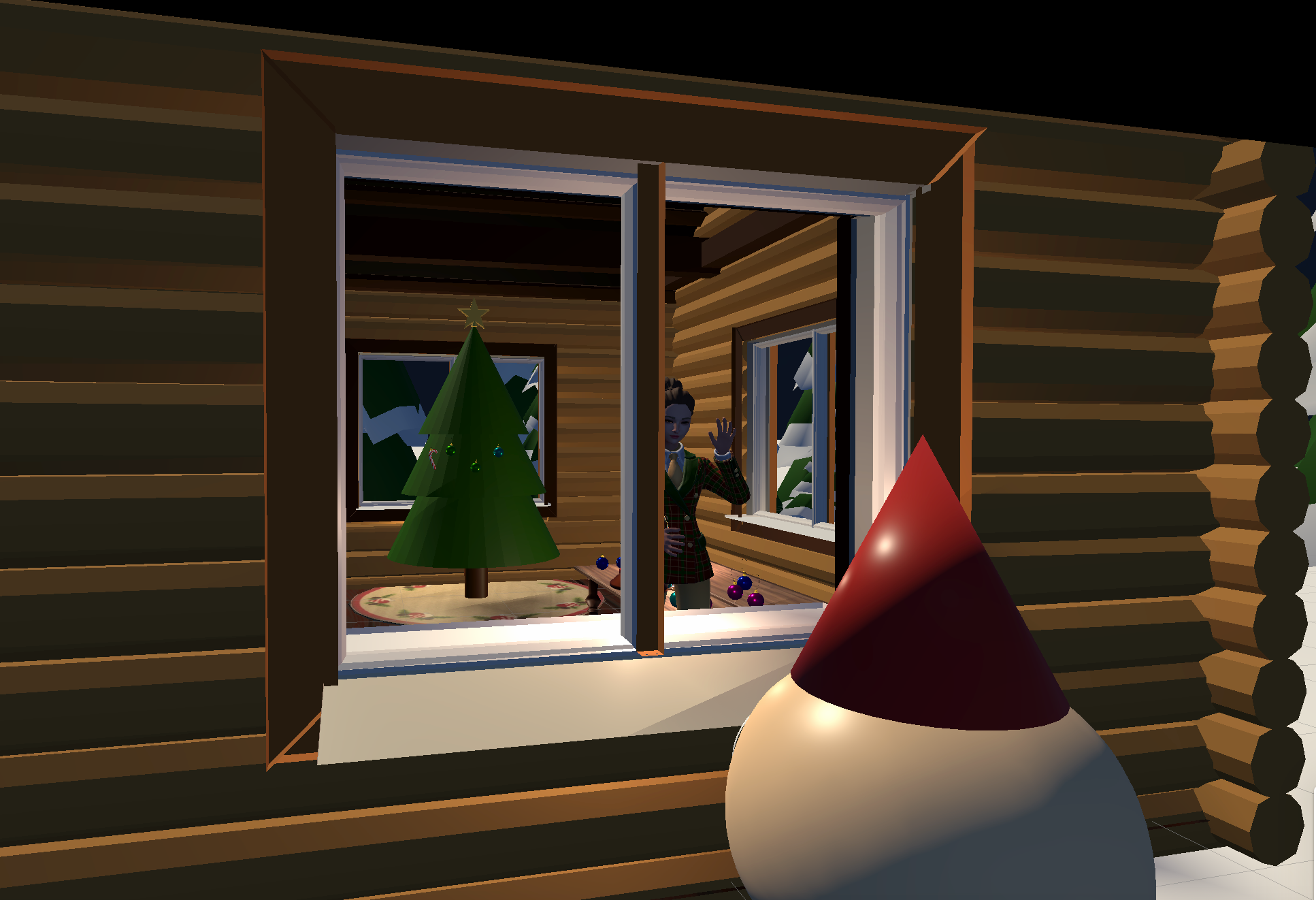

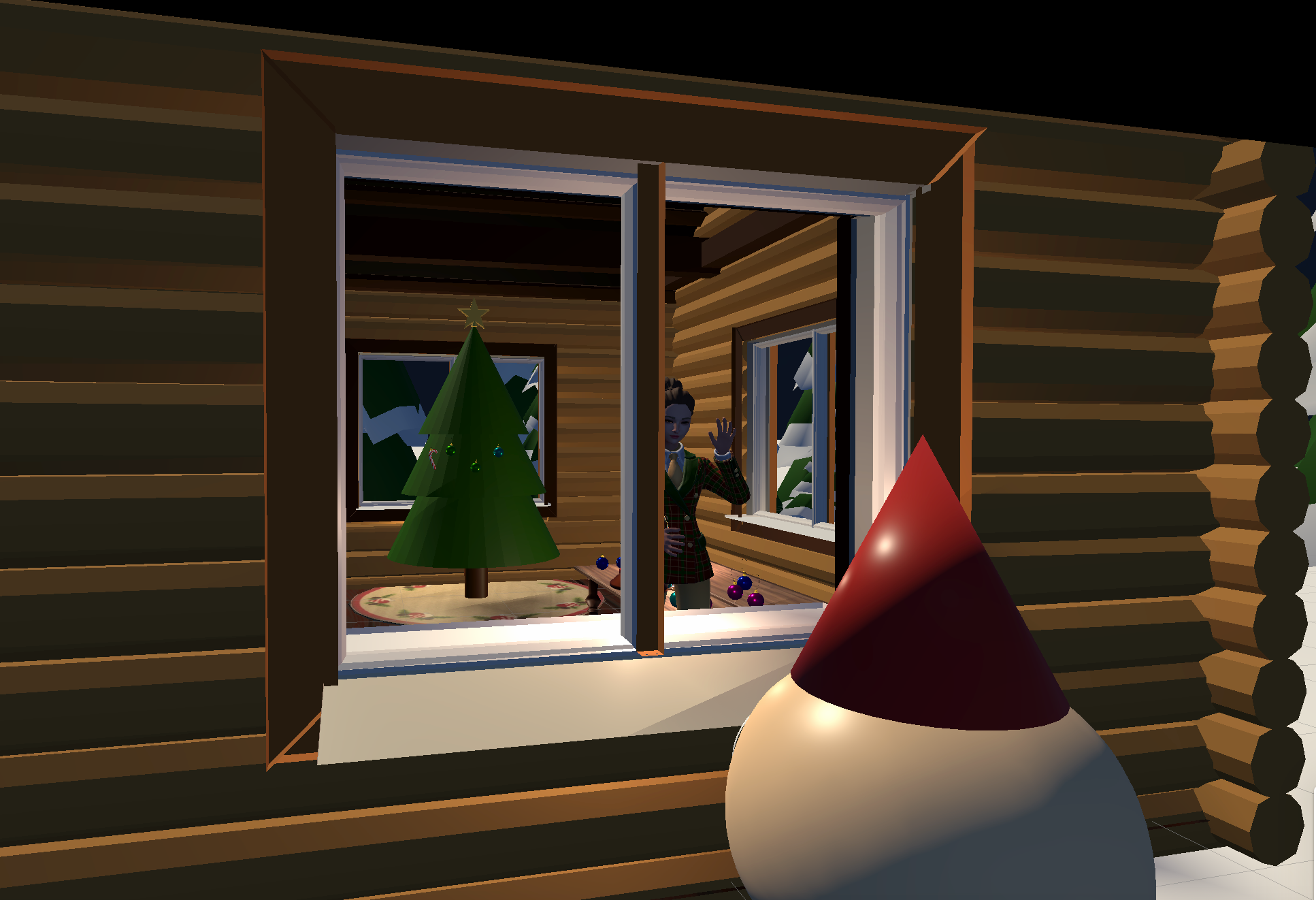

Elf & Myself is a Christmas experience that bridges the gap between the virtual world and the outside world. A VR user steps into a cozy Christmas cabin where they can decorate a tree, while an outside user takes the role of an elf who peeks through the windows of the cabin and controls their point of view with the gyroscope in a smartphone.

One of the motivations behind Elf & Myself was to close the distance between the VR player and the audience by breaking the wall between them. VR is usually quite an isolated experience in which interaction with the outside world and with observers is non-existent or restricted. Instead of merely casting the HMD user's view to a public screen, we wanted to give the outside observer a higher sense of immersion by allowing them to “peep” into the VR world and control their point of view through embodied interaction. The outside spectator’s position was also visualized in the VR space.

Our goal was therefore to create a closer connection between the audience and the player immersed in VR. While doing this, we wanted to deepen our knowledge of graphics and interaction by exploring new techniques and novel means of interaction. We also wanted to work with lights and shadows to create a more realistic, immersive experience and a cozy atmosphere in line with our Christmas theme. The project was created as part of the course Advanced Graphics and Interaction in just four weeks during the winter of 2022.

Graphics and interaction technologies used and developed

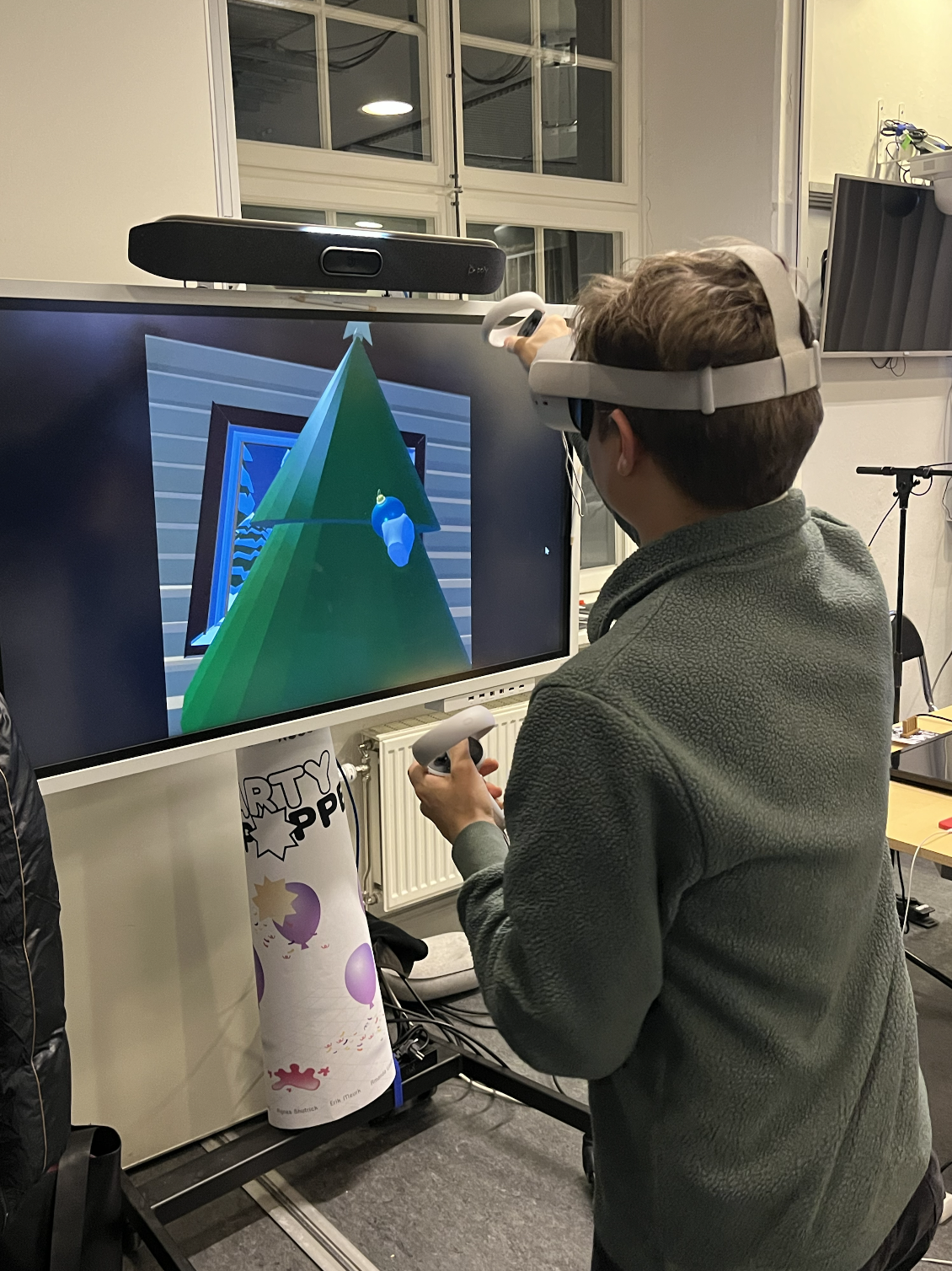

Ready Player Me offers customizable 3D avatars created for VR experiences (especially the Metaverse). The avatar was imported using the Ready Player Me SDK and behaves like a normal 3D asset in Unity. For the skeletal movement of the avatar, we used Unity's Animation Rigging package. Rigging defines the bone structure of a character and assigns skin weights to the mesh, which determine how the vertices of the mesh deform when the bones move. Once a character is rigged, it can be animated by moving the bones in the hierarchy, which causes the mesh to deform and creates movement. In our case, the avatar's movements were driven by the head and hand movements of the player, tracked by the Oculus VR kit. Using the avatar from Ready Player Me allowed us to spend less time on advanced skeleton modeling in Blender and more time on other graphics and interactions that were crucial for the experience.
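
With only the head and hands tracked, joints such as the elbow have to be inferred rather than measured. The source does not say which constraints were configured, so the following is only a minimal sketch of one common technique, two-bone inverse kinematics, written in plain Python rather than Unity code. The function name and the bone lengths are hypothetical.

```python
import math


def elbow_angle(upper_len, lower_len, shoulder, hand):
    """Return the interior elbow angle (radians) that puts the wrist at `hand`.

    `shoulder` and `hand` are (x, y, z) positions. The bone lengths are
    hypothetical values chosen for illustration.
    """
    dist = math.dist(shoulder, hand)

    # The hand target may be out of reach; clamp to what the arm can span.
    max_reach = upper_len + lower_len
    min_reach = abs(upper_len - lower_len)
    dist = min(max(dist, min_reach), max_reach)

    # Law of cosines: dist^2 = a^2 + b^2 - 2ab*cos(elbow)
    cos_elbow = (upper_len**2 + lower_len**2 - dist**2) / (
        2 * upper_len * lower_len
    )
    # Guard against tiny floating-point overshoot before acos.
    cos_elbow = min(1.0, max(-1.0, cos_elbow))
    return math.acos(cos_elbow)
```

The angle only fixes how far the elbow bends. The direction in which it points remains ambiguous with three tracked points, which is one reason arm poses can look unnatural.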

To synchronize Elf & Myself between the VR player and the audience, we first wanted to video-stream an in-game camera from Unity that the audience could see. The problem was that we had to stream from the standalone build running on the Oculus Quest 2 headset. This proved tricky, as we could not find an existing solution for streaming an in-game camera from an Android build, only from PC. Instead, we used Normcore to keep the state of all clients the same. Normcore is a networking plugin and hosting service created by Normal, built to enable multiplayer gameplay in a quick and efficient way. Using Normcore, we could use scripts to send data about the orientation of objects in space and keep everything up to date, so that the audience view and the VR player's view showed the same gameplay.

All models were created using Blender. Some procedural textures were also developed, such as the gingerbread cookie and the fireplace stone. To move the procedural textures from Blender to Unity, the objects were UV unwrapped and the textures were baked into images: a diffuse map for color information and a normal map for surface detail. These were then applied to the imported model in Unity. This not only enabled us to use the procedural textures created in Blender, but can also save render time.

We did use some external resources. The crackling noise of the fireplace is from freesound.org. The avatar is from Ready Player Me, which is a Metaverse avatar creator.

Challenges & Obstacles

To break down the wall between the HMD user and the outside spectator, we deemed it critical to create realistic avatars representing both users. We decided to use the Oculus Quest 2 because we were familiar with it and because it runs cordlessly, which gives the player more mobility. However, that also meant we only had three points of tracking (the headset and the two controllers), which was a big challenge when creating the representation of the VR player. It is very challenging, if not impossible, to create fully realistic human movement from only three points of tracking. For the outside spectator we only had one kind of tracking data, the rotation of the phone, so representing that user in VR was also a big challenge.

Due to the time restriction of this project, it was also a challenge to prioritize the work and still explore advanced and, to us, novel graphics and interaction techniques. From previous experience, we knew that another big challenge when working with the Oculus Quest 2 is balancing graphics quality against performance.

The biggest obstacle was the restricted time we had to develop this project: four weeks. As mentioned, we had to prioritize our work. The end product achieves what we set out to do, that is, to narrow the gap between the virtual world and the outside world using embodied interaction. However, it would have benefited from more thorough user testing, since it does contain some bugs and is somewhat unreliable.

Another obstacle was that all of us advanced our Blender skills out of interest and therefore wanted to add more things to the scene, which hurt the headset's performance. When putting everything together, several objects had to be recreated as lower-poly versions in order for the scene to run.

Lessons learned

For this project, we had to adapt to the graphics constraints of the Oculus Quest 2 and learned how to lower the resolution of our 3D models to work within them. For example, the surrounding trees initially had snow on them, but each tree then consisted of 33 thousand vertices. Replacing the snow with plain white cones and applying a Decimate modifier reduced the number of vertices to ≈250. We also used baked lighting and low-resolution shadows so that the game would run smoothly. This taught us the importance of always testing our work in the final environment it is intended for, which in this case was VR, so that we are not fumbling in the dark about how the meshes and textures we have created might affect performance.

Additionally, we learned the importance of being careful with scripts. One of our scripts caused a major performance issue because an if statement was checked continuously. When that was fixed, performance improved tremendously, so we will be more careful about how we write our scripts in the future.
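
The source does not show the offending script, so the following is only an illustration of the general pattern, not the actual fix. It assumes the cost came from a condition that was re-evaluated on every frame even though its inputs rarely changed, and guards that check with a "dirty" flag. The class and method names are hypothetical.

```python
class TreeState:
    """Hypothetical game state with a check that is costly to evaluate."""

    def __init__(self):
        self.ornaments = 0
        self.checks_run = 0   # counts how often the costly check really runs
        self._dirty = True    # True when the cached answer may be stale
        self._cached = False

    def add_ornament(self):
        self.ornaments += 1
        self._dirty = True    # state changed, so the cache must be refreshed

    def _expensive_check(self):
        # Stand-in for a condition that is expensive to compute.
        self.checks_run += 1
        return self.ornaments >= 3

    def is_fully_decorated(self):
        # Called every frame, but the costly part runs only after a change.
        if self._dirty:
            self._cached = self._expensive_check()
            self._dirty = False
        return self._cached
```

Calling `is_fully_decorated()` on every frame then costs one flag comparison unless an ornament was added since the previous call.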

We also learned how to bake textures in Blender. Although it is quite a time-consuming and unintuitive process, it enabled us to use the procedural textures created in Blender. The baked textures also affected the headset's performance less than other graphics did.

As it was everyone’s first time making a multiplayer game, we learned how tricky it can be to synchronize all the objects between the clients. If two clients interacted with an object at the same time, they interfered with each other and a lot of bugs started to show. We solved the synchronization issues by having a client claim ownership of an object when it interacted with it, so that two clients could not interact with the same object at the same time.
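
The ownership idea can be sketched independently of any networking library. The model below is plain Python, not Normcore's API, and all names in it are hypothetical. It only shows the rule described above: an object accepts changes from the client that currently owns it, and a second client's claim is refused until ownership is released.

```python
class SharedObject:
    """Hypothetical model of a networked object with a single owner."""

    def __init__(self, name):
        self.name = name
        self.owner = None                 # client id, or None if unowned
        self.position = (0.0, 0.0, 0.0)

    def request_ownership(self, client_id):
        # Grant the claim only if nobody else currently owns the object.
        if self.owner is None or self.owner == client_id:
            self.owner = client_id
            return True
        return False

    def release(self, client_id):
        # Only the current owner can give the object up.
        if self.owner == client_id:
            self.owner = None

    def move(self, client_id, position):
        # Updates from non-owners are ignored, so clients cannot interfere.
        if self.owner != client_id:
            return False
        self.position = position
        return True
```

For example, if the VR player grabs an ornament first, the elf client's `request_ownership` call returns `False` until the VR player calls `release`.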

To allow the outside observer to control their point of view on a fixed screen through embodied interaction, we used the gyroscope sensor in a smartphone. We tried to make this compatible with both iPhone and Android, but struggled with Apple’s security measures and decided to implement it only for Android. Using the Unity Remote 5 app, we learned how to connect the phone to the Unity Editor on the computer and access the gyroscope data. We then calibrated the data so that the phone's rotation mapped sensibly onto the rotation of the camera in the VR scene. The resulting rotation values were applied not only to control the camera in the scene but also to rotate the elf’s head.
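
The source does not describe how the calibration was done. One common approach, assumed here, is to store the phone's orientation at a reference moment and express every later reading relative to it. The sketch below shows that step with unit quaternions in plain Python. The function names are hypothetical, and the conversion between the phone's sensor axes and Unity's axes is deliberately left out.

```python
import math


def q_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q):
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def from_axis_angle(axis, degrees):
    """Unit quaternion for a rotation of `degrees` about a unit-length axis."""
    half = math.radians(degrees) / 2.0
    s = math.sin(half)
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


def relative_rotation(reference, current):
    """Rotation of `current` measured from the calibrated `reference` pose."""
    return q_mul(q_conj(reference), current)
```

The result of `relative_rotation` is the identity rotation while the phone is held in its reference pose, so the camera and the elf's head start out facing the calibrated direction.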

Finally, we realized how difficult it can be to rig an avatar in VR. Since only the headset and the two controllers are tracked, it is hard, if not essentially impossible, to mimic the movement of arms and legs in VR. We understood why many games choose to hide the legs of avatars and to not let players see their own arms. It will be interesting to see how this problem is solved in the future.